The ELECTRA model introduces a novel approach to pre-training by leveraging a Replaced Token Detection (RTD) mechanism instead of the traditional Masked Language Model (MLM) used in models like BERT. This review will cover the model’s methodology, efficiency improvements, and its implications for the field of Natural Language Processing (NLP).

1. Introduction

ELECTRA = Efficiently Learning an Encoder that Classifies Token Replacements Accurately

ELECTRA proposes a more efficient method than the traditional Masked Language Model (MLM) approach, aiming to enhance natural language understanding by training the encoder to accurately classify replaced tokens within a sentence.

Before starting the review, it's important to understand the pre-training methodology used by earlier NLP models.

Models like BERT, which have been widely used, typically rely on the MLM (Masked Language Model) approach for pre-training. However, this approach has several issues:

Issues with BERT's MLM Approach

- Mismatch between Training and Inference: During training, the model learns with [MASK] tokens, but during inference, these tokens are absent, leading to a discrepancy.

- Limited Training Data Utilization: Only the roughly 15% of tokens that are masked in each example contribute to the loss, which limits the model's ability to fully leverage the data.

- High Computational Cost: The MLM approach still requires a significant amount of computation for effective training.

To address these issues, this paper proposes RTD (Replaced Token Detection) as an alternative.

In this approach, the model performs a pre-training task where it learns to distinguish between real input tokens and plausible fake tokens.

→ This method resolves BERT's mismatch problem (the issue that arises when masked data is used during the pre-training phase, but the original sentence without [MASK] tokens is used during fine-tuning).

Another noteworthy aspect of ELECTRA is its use of the Generator-Discriminator architecture from GANs. Unlike the traditional MLM approach, the generator replaces some tokens, and the discriminator's role is to identify these replaced tokens. While this structure borrows the concept from GANs, it is distinct in that it prioritizes discrimination over sentence generation.

→ Let’s now examine how these methods contribute to improving training performance.

Pre-training method

1. Replaced Token Detection

The image above illustrates the overall architecture of ELECTRA, which resembles the structure of a GAN. Now, let’s take a closer look at how each of the Generator and Discriminator components operates.

1. For the input x=[x1,…,xn], determine the set of positions to mask, m=[m1,…,mk].

2. Replace the input tokens at the selected positions with [MASK].

3. Predict the original tokens at the masked positions, and then insert replacement tokens through sampling. (For a given position t, output the probability of generating a specific token xt through the softmax layer.)

Here, e represents the token embedding.

- Instead of replacing the masked tokens with [MASK], replace them with tokens sampled from the generator’s softmax distribution pG(xt∣x) to create a corrupted example x^corrupt.

- Then, train the model to predict which tokens in x^corrupt match the original input x.
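The corruption steps above can be sketched in a few lines of plain Python. This is only an illustration under my own assumptions: `choose_mask_positions`, `corrupt`, and `toy_generator_sample` are hypothetical names, and the toy generator simply samples uniformly from a tiny vocabulary instead of running a real MLM.

```python
import random

MASK = "[MASK]"

def choose_mask_positions(n_tokens, mask_prob=0.15, rng=random):
    """Pick the set of positions m = [m1, ..., mk] to mask (about 15% of the input)."""
    k = max(1, round(mask_prob * n_tokens))
    return sorted(rng.sample(range(n_tokens), k))

def toy_generator_sample(masked_tokens, position, rng=random):
    """Hypothetical stand-in for sampling from pG(xt | x^masked).
    A real Generator would be a small masked language model."""
    vocab = ["the", "a", "chef", "cooked", "ate", "meal"]
    return rng.choice(vocab)

def corrupt(tokens, positions, generator_sample):
    """Build x^masked, x^corrupt, and per-token labels for the Discriminator."""
    # Step 2: replace the selected positions with [MASK].
    masked = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    # Step 3: fill each masked position with a token sampled from the Generator.
    corrupted = list(tokens)
    for i in positions:
        corrupted[i] = generator_sample(masked, i)
    # Label 1 = token matches the original, 0 = token was replaced.
    # A sample that happens to equal the original token is labeled as original.
    labels = [int(c == t) for c, t in zip(corrupted, tokens)]
    return masked, corrupted, labels

tokens = ["the", "chef", "cooked", "the", "meal"]
masked, corrupted, labels = corrupt(tokens, [0, 2], toy_generator_sample)
print(masked)
print(corrupted)
print(labels)
```

Note that the labels cover every position in the input, not just the masked ones, which is what lets the Discriminator learn from all tokens.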

Now, let's look into the loss function.

- In the Generator’s loss function, the model predicts the token at each masked position i within the masked input x^masked. This loss is the negative log probability that the Generator assigns to the original token at each masked position, so minimizing it guides the Generator to accurately restore the masked tokens.

- In the Discriminator’s loss function, the model assigns scores to tokens, distinguishing between real and fake ones. This setup is similar to the loss function seen in GANs.
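For reference, the quantities described above can be written out as follows. This is my transcription of the formulas as I understand them from the ELECTRA paper, in the notation of this review. The symbols h_G and h_D (the Generator and Discriminator encoder outputs), w (the Discriminator's output weights), and λ (the weight on the Discriminator loss) are not introduced in the text above.

```latex
\begin{aligned}
p_G(x_t \mid x) &= \frac{\exp\big(e(x_t)^{\top} h_G(x)_t\big)}{\sum_{x'} \exp\big(e(x')^{\top} h_G(x)_t\big)},
\qquad
D(x, t) = \operatorname{sigmoid}\big(w^{\top} h_D(x)_t\big) \\[4pt]
\mathcal{L}_{\text{MLM}}(x, \theta_G) &= \mathbb{E}\Big[\sum_{i \in m} -\log p_G\big(x_i \mid x^{\text{masked}}\big)\Big] \\[4pt]
\mathcal{L}_{\text{Disc}}(x, \theta_D) &= \mathbb{E}\Big[\sum_{t=1}^{n}
  -\mathbb{1}\big(x^{\text{corrupt}}_t = x_t\big)\,\log D\big(x^{\text{corrupt}}, t\big)
  -\mathbb{1}\big(x^{\text{corrupt}}_t \neq x_t\big)\,\log\big(1 - D(x^{\text{corrupt}}, t)\big)\Big] \\[4pt]
\min_{\theta_G,\,\theta_D} \;& \sum_{x \in \mathcal{X}} \mathcal{L}_{\text{MLM}}(x, \theta_G) + \lambda\, \mathcal{L}_{\text{Disc}}(x, \theta_D)
\end{aligned}
```

The Generator loss sums only over the masked positions i ∈ m, while the Discriminator loss sums over every position t = 1, …, n.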

Key Point: Unlike BERT’s MLM approach, which only accumulates loss for masked tokens, ELECTRA’s Discriminator calculates loss for all tokens. By evaluating loss across the entire input, ELECTRA can learn from more information than BERT, making training more efficient while using the same amount of data and computational resources. Consequently, this method allows ELECTRA to utilize every token during training, leading to improved performance.

This approach directly addresses one of the key limitations in the traditional MLM approach that we mentioned earlier: “2. Only about 15% of each example is used for training.”
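To make the "15% versus all tokens" point concrete, here is a small sketch in plain Python. The function names and the toy probabilities are my own assumptions for illustration; a real implementation would compute these losses on model outputs in a deep learning framework.

```python
import math

def mlm_loss(probs_of_true_token, masked_positions):
    """MLM-style loss: -log p(original token), summed over masked positions only."""
    return sum(-math.log(probs_of_true_token[i]) for i in masked_positions)

def rtd_loss(d_scores, labels):
    """Replaced-token-detection loss: binary cross-entropy summed over every position.

    d_scores[t] is the predicted probability that token t is original.
    labels[t] is 1 if token t is original and 0 if it was replaced.
    """
    total = 0.0
    for d, y in zip(d_scores, labels):
        total += -math.log(d) if y == 1 else -math.log(1.0 - d)
    return total

n_tokens = 20
masked_positions = [1, 5, 9]  # 15% of 20 tokens
labels = [0 if t in masked_positions else 1 for t in range(n_tokens)]

# Toy model outputs: every prediction is 0.5, so each loss term equals log(2).
# The MLM loss then has 3 terms, while the RTD loss has 20 terms.
print(mlm_loss([0.5] * n_tokens, masked_positions))
print(rtd_loss([0.5] * n_tokens, labels))
```

With identical per-term values, the only difference between the two sums is how many positions contribute, which is the effect the Key Point describes.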

Another Important Difference with GANs: In a GAN, the generator is trained adversarially to fool the discriminator, ideally driving its real/fake predictions toward a probability of 0.5. ELECTRA instead trains its Generator with a Maximum Likelihood approach.

To examine whether this approach is indeed more efficient than adversarial training, an ablation study was conducted. Since ELECTRA deals with discrete tokens, reinforcement learning was indirectly applied to train the model adversarially for comparison. (Ultimately, this method did not yield better performance.)

2. Weight sharing

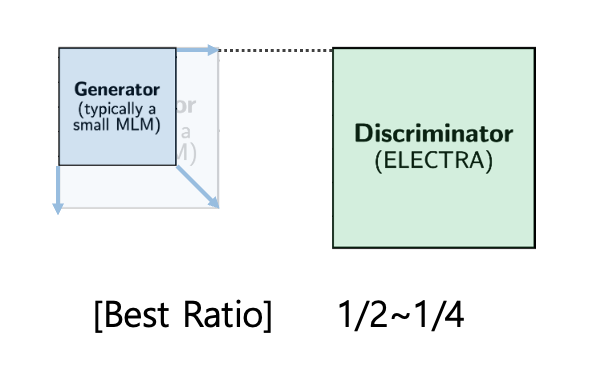

Now, let's examine the size of the Generator and Discriminator models. The paper reports that models work best when the Generator is about 1/4 to 1/2 the size of the Discriminator.

If the size of the Generator is too large compared to the Discriminator, it generates replaced tokens too accurately, causing the Discriminator to fail at distinguishing them and preventing effective learning.

Additionally, the Generator and Discriminator share their input (token) embeddings for the following reasons:

- Improved Training Efficiency

By sharing the Embedding between the Generator and Discriminator, there’s no need for each network to learn separate Embeddings. This reduces memory usage and saves computational resources, speeding up training, especially when working with large vocabulary corpora.

- Consistent Representation Learning

When the Generator and Discriminator use the same Embedding, both networks learn a consistent vocabulary representation. This alignment allows fake tokens generated by the Generator and input tokens processed by the Discriminator to be represented in the same semantic space, making it easier for the Discriminator to distinguish between real and fake tokens.
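As a rough back-of-the-envelope illustration of the saving from sharing, the sketch below counts embedding parameters with and without a shared table. The numbers are my assumptions, not figures from the paper: a vocabulary of 30,522 tokens and an embedding width of 768, with both networks using the same width for simplicity. `EmbeddingTable` and `total_embedding_params` are hypothetical helpers.

```python
class EmbeddingTable:
    """Toy stand-in for a token embedding matrix of shape (vocab_size, dim)."""

    def __init__(self, vocab_size, dim):
        self.vocab_size = vocab_size
        self.dim = dim

    def num_params(self):
        return self.vocab_size * self.dim

def total_embedding_params(generator_table, discriminator_table):
    """Count each distinct table object once, so a shared table is not double counted."""
    unique = {id(t): t for t in (generator_table, discriminator_table)}
    return sum(t.num_params() for t in unique.values())

VOCAB_SIZE = 30522  # assumed vocabulary size
EMBED_DIM = 768     # assumed embedding width

shared = EmbeddingTable(VOCAB_SIZE, EMBED_DIM)
separate_g = EmbeddingTable(VOCAB_SIZE, EMBED_DIM)
separate_d = EmbeddingTable(VOCAB_SIZE, EMBED_DIM)

print(total_embedding_params(shared, shared))          # one table
print(total_embedding_params(separate_g, separate_d))  # two tables
```

Under these assumptions, sharing halves the number of embedding parameters that have to be stored and updated.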

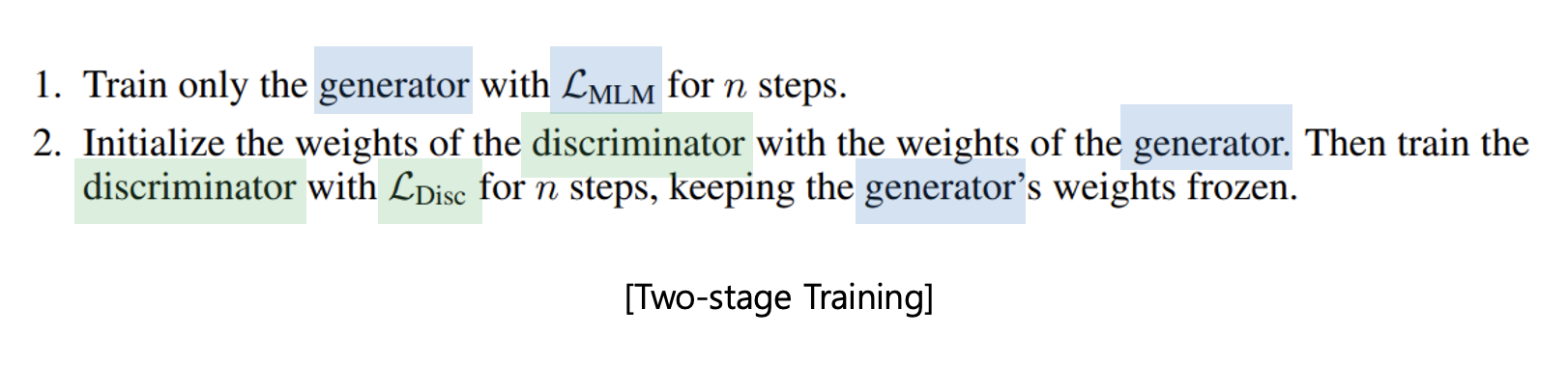

To verify whether jointly minimizing the combined loss was indeed effective, the authors also tested an alternative training algorithm in place of minimizing the above combined loss function.

The method is shown in the figure above. As a result, effective learning did not occur, as the Generator started training first. The researchers identified the primary cause as the Generator producing replaced tokens too accurately, making them indistinguishable.

Experiment results

Now, let's look at the experimental results comparing performance with other models.

The key metrics are as follows:

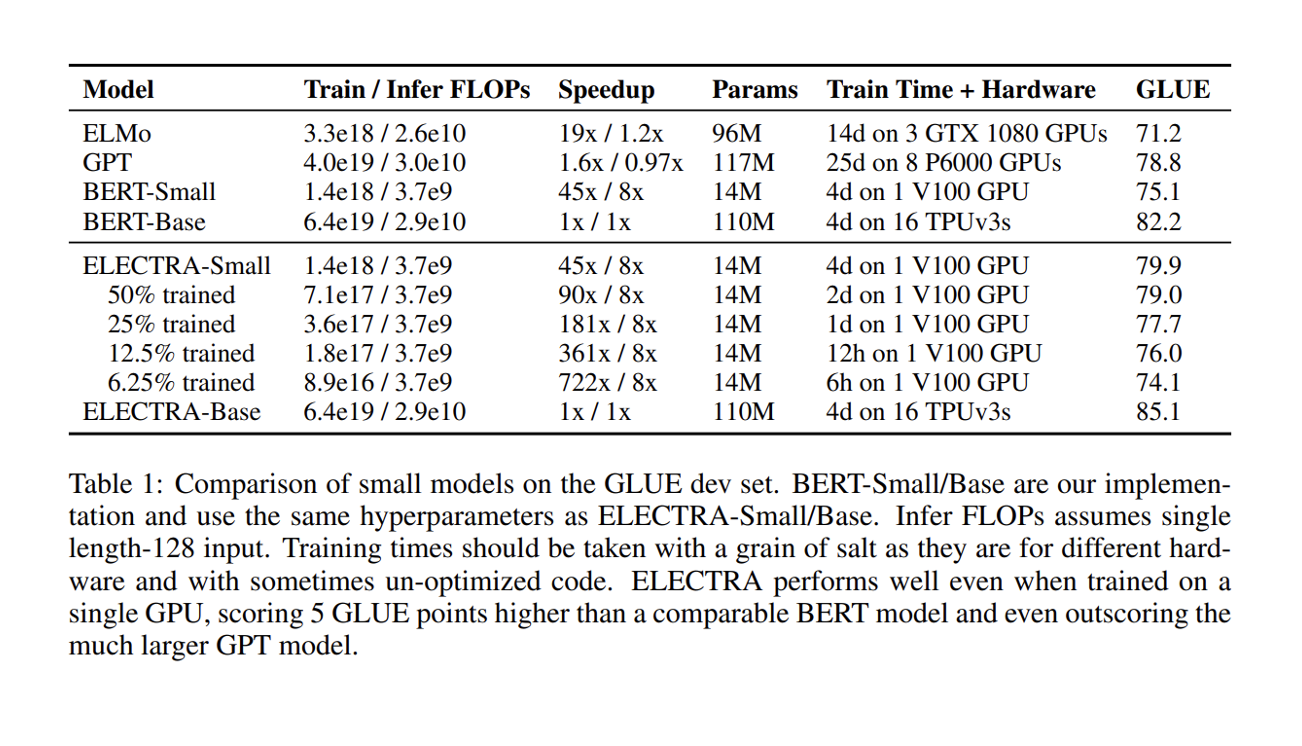

- Train / Infer FLOPs

- This indicates the FLOPs (floating-point operations) required for each model's training and inference. BERT-Small and ELECTRA-Small use similar FLOPs, yet ELECTRA-Small achieves higher performance at that comparable compute budget.

- ELECTRA-Base uses the same training FLOPs (6.4e19) as BERT-Base but surpasses BERT in GLUE scores.

- Speedup

- Although BERT-Base and ELECTRA-Base have the same computational load and speed, ELECTRA-Base is more efficient as it achieves higher performance with the same resources.

- Params (Number of Parameters)

- This metric indicates model size. Both ELECTRA-Small and BERT-Small have 14M parameters, while ELECTRA-Base and BERT-Base each have 110M parameters.

- Train Time + Hardware

- This represents the time and hardware needed to train each model. ELECTRA-Small takes 4 days on a single V100 GPU, whereas ELMo and GPT require multiple GPUs and longer training times.

- ELECTRA matches or surpasses BERT in performance with the same training time and hardware requirements.

- GLUE Score

- This is a measure of each model's performance on the GLUE development set. ELECTRA-Small scores 79.9, outperforming BERT-Small (75.1), and ELECTRA-Base scores 85.1, exceeding BERT-Base (82.2).

From the figure above, we can see that under the same conditions, ELECTRA trains more efficiently.

Now, let’s check whether this efficiency also holds for larger models.

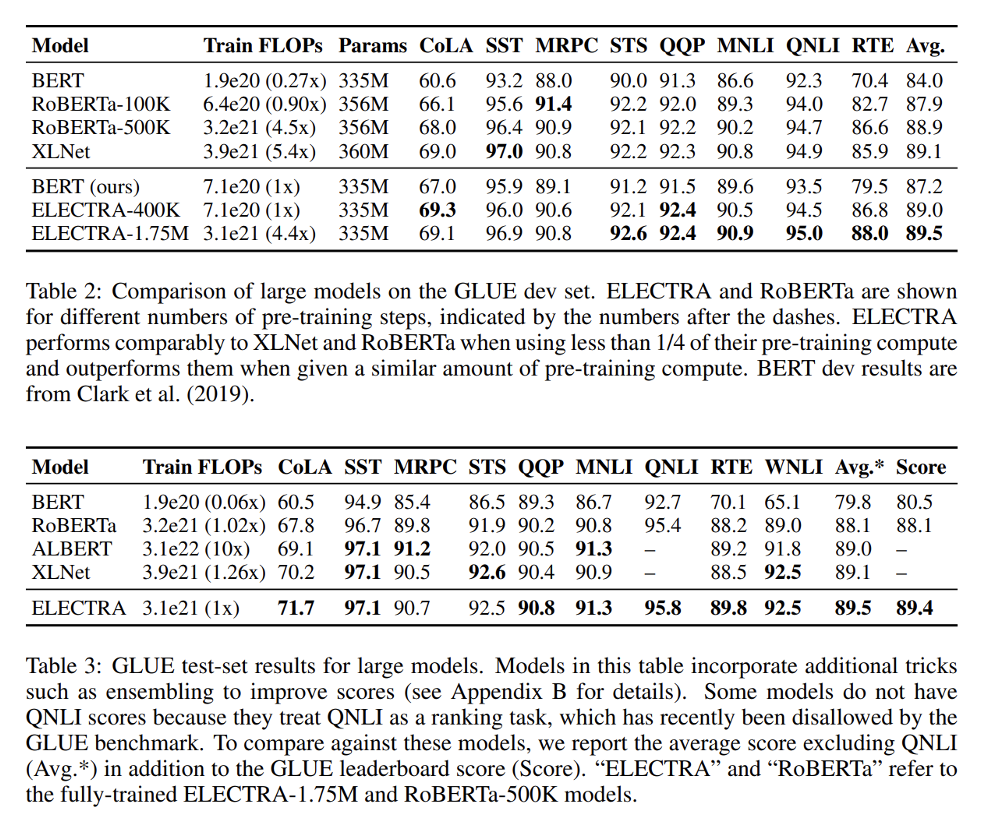

Table 2 presents metrics evaluating performance on GLUE’s sub-tasks (CoLA, SST, MRPC, STS, QQP, MNLI, QNLI, RTE, WNLI).

A notable point is that ELECTRA-400K demonstrates approximately 5 times greater training efficiency than XLNet while maintaining a similar average score, with only a 0.1 difference.

Additionally, Table 3 shows that for larger models, ELECTRA achieves a higher GLUE score.

Ablation study

Let’s now examine whether the two methods described above truly contributed to improving the efficiency and performance of ELECTRA.

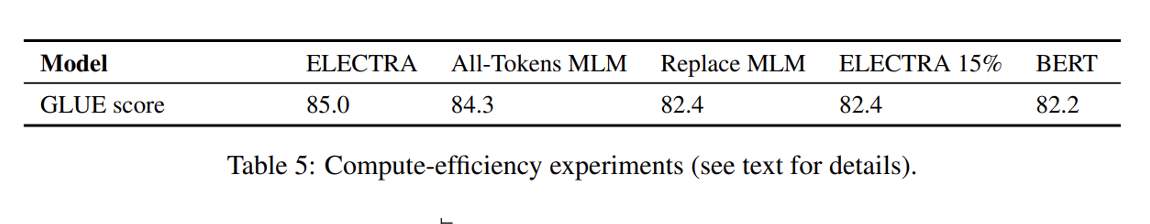

- ELECTRA 15%

- Previously, we mentioned that, unlike BERT, ELECTRA does not accumulate loss solely from masked tokens (15%) but instead from all tokens. To verify whether accumulating loss across all tokens truly impacts training performance, an experiment was conducted with ELECTRA 15%, which only accumulates the Discriminator loss from the 15% of tokens that were masked out of the input.

- Replace MLM

- This model was tested to determine if switching from the MLM approach to the Replaced Token Detection approach was effective. It uses the traditional MLM model but replaces masked tokens with samples generated by the Generator.

- All-Tokens MLM

- This model is a hybrid between BERT and ELECTRA, where instead of accumulating loss from only 15% of masked tokens in the MLM model, loss is accumulated across all tokens.
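The three ablation variants differ along two axes: which objective is used, and which positions contribute to the loss. The lookup below summarizes this as I understand it from the paper. `loss_positions` is a hypothetical helper, and the "input corruption" column (including the detail that All-Tokens MLM also feeds generator samples) is my reading of the paper rather than something stated in this review.

```python
def loss_positions(variant, n_tokens, masked_positions):
    """Return (objective, input corruption, positions that contribute to the loss)."""
    all_positions = list(range(n_tokens))
    table = {
        # variant:        (objective, what fills masked slots, loss positions)
        "BERT":           ("mlm", "[MASK]",            masked_positions),
        "ELECTRA":        ("rtd", "generator samples", all_positions),
        "ELECTRA 15%":    ("rtd", "generator samples", masked_positions),
        "Replace MLM":    ("mlm", "generator samples", masked_positions),
        "All-Tokens MLM": ("mlm", "generator samples", all_positions),
    }
    return table[variant]

masked = [1, 5, 9]  # 15% of a 20-token input
for name in ["BERT", "ELECTRA", "ELECTRA 15%", "Replace MLM", "All-Tokens MLM"]:
    objective, corruption, positions = loss_positions(name, 20, masked)
    print(f"{name:15s} objective={objective} input={corruption} loss_terms={len(positions)}")
```

Reading the table this way, ELECTRA 15% isolates the effect of learning from all tokens, while Replace MLM isolates the effect of removing [MASK] from the input.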

The results, as shown in the table above, indicate a performance ranking of ELECTRA > All-Tokens MLM > Replace MLM > ELECTRA 15%.

This suggests that, rather than the Replaced Token Detection method alone, the approach of accumulating loss across all tokens has a greater impact on improving ELECTRA’s training performance and efficiency. In other words, the ability to learn from all tokens, rather than just a subset of masked tokens, appears to be the primary factor driving ELECTRA’s enhanced effectiveness.

Comments for Paper

Reading this paper, I found it interesting that, unlike much prior NLP research that prioritizes performance metrics without considering training efficiency, this study made a deliberate effort to improve training efficiency.

Additionally, the idea of transitioning from the traditional MLM approach to the RTD method was innovative, and it’s commendable that the authors successfully implemented architectural ideas inspired by GANs. However, there are several aspects worth considering:

1. Explanation of the Evaluation Datasets

In Figure 8, we see that models such as XLNet and RoBERTa outperform ELECTRA in certain GLUE sub-tasks like CoLA, SST, and MRPC. The paper, however, does not discuss this in detail and only mentions the average GLUE score.

Curious about this, I explored the CoLA, SST, and MRPC datasets. CoLA assesses grammatical acceptability, while RTE evaluates textual entailment. Other tasks (e.g., SST-2, MNLI, QQP) involve sentiment analysis, sentence similarity, and multi-sentence reasoning, which are comparatively less demanding in terms of linguistic entailment or complex logical inference.

This led me to think that ELECTRA might face difficulties in achieving high generalization performance on textual entailment tasks. Possible causes could include overfitting due to dataset limitations, but I believe it would have been beneficial if the paper had explored this issue further.

2. Complex Model Structure

ELECTRA employs a GAN-like structure, training with both a Generator and Discriminator, making it more complex to implement and tune compared to BERT. This complexity could make fine-tuning and parameter adjustments more challenging.

3. Limitations in Generator Performance

ELECTRA’s Generator is designed as a small model to predict replacement tokens. However, if the Generator’s performance is suboptimal, the quality of the generated fake tokens may decline. This could lead to the Discriminator learning only simple patterns, which may negatively impact the model’s overall performance.

4. Data Dependency

While ELECTRA enables efficient training by allowing all tokens to be utilized through the Discriminator, it is highly dependent on the quality of the pre-training data. Insufficient or biased data could distort the Discriminator’s learning and potentially degrade the model’s overall performance.

Conclusion

In conclusion, while ELECTRA demonstrates impressive efficiency and performance, certain limitations exist in terms of structural complexity and application scope. I would like to close this paper review with these observations.